Summary

In this post, I'll dig into a common problem faced by developers: the same-origin policy. A relaxation of that policy is known as Cross-Origin Resource Sharing (CORS). I'm going to focus on the challenges with CORS in the Google Cloud environment.

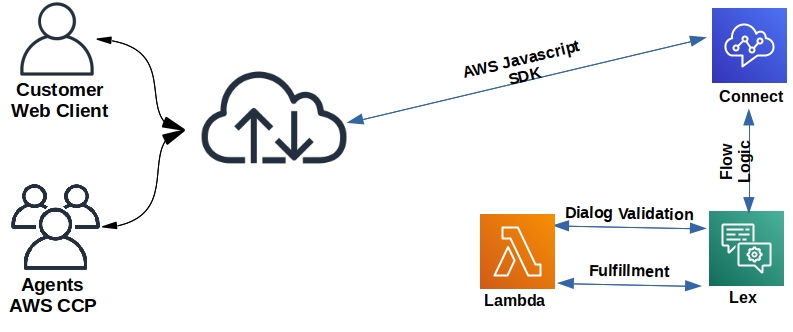

Architecture

Below is the test environment I'll be using for the following four different scenarios:

- Scenario 1: Publicly accessible (no authentication) Cloud Function call with no CORS support from a static website hosted on Cloud Storage.

- Scenario 2: Public Cloud Function call with CORS support from the static website.

- Scenario 3: Private Cloud Function call (authentication required) with CORS support from the static website.

- Scenario 4: Proxied call to a private Cloud Function. The proxy function is publicly accessible and provides CORS support.

Scenario 1: Public Function, No CORS Support

Cloud Function

exports.pubGcfNoCors = (req, res) => {
    res.set('Content-Type', 'application/json');
    let result;

    switch (req.method) {
        case 'POST':
            result = { result: 'POST processed' };
            res.status(201).json(result);
            break;
        case 'GET':
            result = { result: 'GET processed' };
            res.status(200).json(result);
            break;
        case 'PUT':
            result = { result: 'PUT processed' };
            res.status(200).json(result);
            break;
        case 'DELETE':
            result = { result: 'DELETE processed' };
            res.status(200).json(result);
            break;
        default:
            res.status(405).send(`${req.method} not supported`);
    }
};

CURL Output

Success. The function performs as expected when called from curl.

$ curl https://us-west3-corstest-294418.cloudfunctions.net/pubGcfNoCors

{"result":"GET processed"}

Web Page (static HTML)

This static website is hosted on the domain corstest.sysint.club. The fetch() call in gcf() below requests the Cloud Function at us-west3-corstest-294418.cloudfunctions.net, which is a different origin. That cross-origin request sets up the same-origin conflict.
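To make the conflict concrete: an origin is the scheme, host, and port of a URL, and two URLs are same-origin only when all three match. A minimal sketch using the standard URL class (built into browsers and Node.js); isSameOrigin is a hypothetical helper name, not part of any API:

```javascript
// Hypothetical helper: two URLs are same-origin when scheme, host, and port match.
// URL.prototype.origin serializes exactly those three parts.
function isSameOrigin(a, b) {
    return new URL(a).origin === new URL(b).origin;
}

const page = 'http://corstest.sysint.club/';
const fn = 'https://us-west3-corstest-294418.cloudfunctions.net/pubGcfNoCors';

console.log(isSameOrigin(page, fn)); // false: different scheme and host
console.log(isSameOrigin(page, 'http://corstest.sysint.club/other.html')); // true: only the path differs
```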

<!DOCTYPE html>
<html>
<head>
    <title>Google Cloud Function CORS Demo</title>
    <meta charset="UTF-8">
</head>
<body>
    <h1>Public Google Cloud Function, No CORS Support</h1>
    <input type="button" id="gcf" onclick="gcf()" value="Call GCF">
    <script>
        async function gcf() {
            const url = 'https://us-west3-corstest-294418.cloudfunctions.net/pubGcfNoCors';
            const response = await fetch(url, {
                method: 'GET'
            });
            console.log('response status: ' + response.status);
            if (response.ok) {
                let json = await response.json();
                console.log('response: ' + JSON.stringify(json));
            }
        }
    </script>
</body>
</html>

Browser Output

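Fail. This is the expected result. The page and the Cloud Function are different origins, and the function's response carries no Access-Control-Allow-Origin header, so the browser refuses to expose the response to the page. The request itself still reaches the function (a plain GET needs no preflight); what the browser blocks is the page's access to the response. The fetch() promise rejects with a TypeError, and the console reports a CORS error.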
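Because a CORS failure surfaces in page code as a rejected fetch() promise rather than as an HTTP status, the response.status line in gcf() never runs. A minimal sketch of handling that case, assuming the fetch implementation is passed in so the function can also be exercised outside a browser; callWithCorsCheck is a hypothetical name:

```javascript
// Hypothetical wrapper: reports a CORS (or other network) failure instead of
// letting the rejected fetch() promise escape. fetchImpl defaults to the global fetch.
async function callWithCorsCheck(url, fetchImpl = fetch) {
    try {
        const response = await fetchImpl(url, { method: 'GET' });
        return { ok: response.ok, status: response.status };
    } catch (err) {
        // Browsers reject with a TypeError whose message doesn't say why
        // (Chrome reports only "Failed to fetch"), so check the console for the CORS details.
        if (err instanceof TypeError) {
            return { ok: false, error: 'network or CORS failure' };
        }
        throw err;
    }
}
```

In the page, `callWithCorsCheck(url).then(console.log)` would log the failure object instead of producing an uncaught promise rejection.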

Scenario 2: Public Function with CORS Support

Cloud Function

The second res.set() call below adds the critical header: Access-Control-Allow-Origin tells the browser that pages served from http://corstest.sysint.club may read this function's responses.

exports.pubGcfCors = (req, res) => {
    res.set('Content-Type', 'application/json');
    res.set('Access-Control-Allow-Origin', 'http://corstest.sysint.club');
    let result;

    switch (req.method) {
        case 'POST':
            result = { result: 'POST processed' };
            res.status(201).json(result);
            break;
        case 'GET':
            result = { result: 'GET processed' };
            res.status(200).json(result);
            break;
        case 'PUT':
            result = { result: 'PUT processed' };
            res.status(200).json(result);
            break;
        case 'DELETE':
            result = { result: 'DELETE processed' };
            res.status(200).json(result);
            break;
        default:
            res.status(405).send(`${req.method} not supported`);
    }
};
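This function hard-codes a single allowed origin. If more than one site needs access, a common pattern is to echo the request's Origin header back only when it is on an allowlist, and to add Vary: Origin so caches don't serve one origin's response to another. A minimal sketch for an Express-style request/response (as Cloud Functions provides); ALLOWED_ORIGINS, applyCors, and the localhost entry are hypothetical:

```javascript
// Hypothetical allowlist; only these origins receive Access-Control-Allow-Origin.
const ALLOWED_ORIGINS = new Set([
    'http://corstest.sysint.club',
    'http://localhost:8080', // assumption: a local test server
]);

// Sets CORS headers using Express's req.get / res.vary / res.set.
// Returns true when the request's origin is allowed.
function applyCors(req, res) {
    const origin = req.get('Origin');
    // The response now depends on the Origin header, so caches must key on it.
    res.vary('Origin');
    if (origin && ALLOWED_ORIGINS.has(origin)) {
        res.set('Access-Control-Allow-Origin', origin);
        return true;
    }
    return false;
}
```

Calling `applyCors(req, res);` at the top of pubGcfCors would replace the hard-coded res.set(). A disallowed origin still gets a response, but without the header the browser won't expose it to the page.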

Browser Output

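Success. The call now succeeds in the browser; however, anyone can call the function. Its IAM policy lists allUsers as a Cloud Functions Invoker, and the Access-Control-Allow-Origin header only governs what browsers expose to pages. It does nothing to stop direct callers such as curl.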

Scenario 3: Private (authenticated) Function with CORS Support

Cloud Function

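Below I've added both the allowed-origin header and support for CORS preflighting (OPTIONS). Preflight support is needed because the page now sends an Authorization header, which is not a CORS-safelisted header, so the browser first sends an OPTIONS request asking whether the actual request is allowed.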

exports.privGcfCors = (req, res) => {
    res.set('Content-Type', 'application/json');
    res.set('Access-Control-Allow-Origin', 'http://corstest.sysint.club');
    let result;

    switch (req.method) {
        case 'OPTIONS':
            // CORS preflight: tell the browser which methods and request headers
            // it may use, and let it cache this answer for up to an hour.
            res.set('Access-Control-Allow-Methods', 'POST, GET, PUT, DELETE');
            res.set('Access-Control-Allow-Headers', 'Authorization');
            res.set('Access-Control-Max-Age', '3600');
            res.status(204).send('');
            break;
        case 'POST':
            result = { result: 'POST processed' };
            res.status(201).json(result);
            break;
        case 'GET':
            result = { result: 'GET processed' };
            res.status(200).json(result);
            break;
        case 'PUT':
            result = { result: 'PUT processed' };
            res.status(200).json(result);
            break;
        case 'DELETE':
            result = { result: 'DELETE processed' };
            res.status(200).json(result);
            break;
        default:
            res.status(405).send(`${req.method} not supported`);
    }
};
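Whether the browser sends that OPTIONS preflight at all depends on whether the request is "simple". The rule below is a simplified sketch of the Fetch standard's check (it ignores details such as header value length limits and the Range header); needsPreflight is a hypothetical helper, not a browser API:

```javascript
// Methods that never trigger a preflight on their own.
const SIMPLE_METHODS = new Set(['GET', 'HEAD', 'POST']);

// Request headers a page may send without a preflight (simplified list).
const SAFELISTED_HEADERS = new Set(['accept', 'accept-language', 'content-language', 'content-type']);

// Content-Type values that keep a request "simple".
const SIMPLE_CONTENT_TYPES = new Set([
    'application/x-www-form-urlencoded',
    'multipart/form-data',
    'text/plain',
]);

function needsPreflight(method, headers = {}) {
    if (!SIMPLE_METHODS.has(method.toUpperCase())) return true;
    for (const [name, value] of Object.entries(headers)) {
        const lower = name.toLowerCase();
        if (!SAFELISTED_HEADERS.has(lower)) return true;
        if (lower === 'content-type') {
            // Compare the MIME type only, ignoring parameters such as charset.
            const mime = String(value).split(';')[0].trim().toLowerCase();
            if (!SIMPLE_CONTENT_TYPES.has(mime)) return true;
        }
    }
    return false;
}

console.log(needsPreflight('GET')); // false: the Scenario 1 and 2 pages
console.log(needsPreflight('GET', { Authorization: 'Bearer abc' })); // true: the Scenario 3 page
console.log(needsPreflight('PUT')); // true
```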

CURL Output

Success. Below is an excerpt of a verbose curl call to this function with a Google identity token in the Authorization header. It works as expected.

curl -v https://us-west3-corstest-294418.cloudfunctions.net/privGcfCors \
    -H "Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6ImYwOTJiNjEyZTliNjQ0N2RlYjEwNjg1YmI4ZmZhOGFlNjJmNmFhOTEiLC"

< HTTP/2 200
< access-control-allow-origin: http://corstest.sysint.club
< content-type: application/json; charset=utf-8
< etag: W/"1a-fWnKK8jLd+Ggo6nxcFzkss7mXew"
< function-execution-id: fe2h4j6lnolk
< x-powered-by: Express
< x-cloud-trace-context: 476e70bffcca5a4ee942d27752f18f4c;o=1
< date: Fri, 06 Nov 2020 20:15:30 GMT
< server: Google Frontend
< content-length: 26
< alt-svc: h3-Q050=":443"; ma=2592000,h3-29=":443"; ma=2592000,h3-T051=":443";
{"result":"GET processed"}

Web Page

The web page now supports Google Sign-In. After the user signs in, the page obtains a Google ID token (a JWT) and sends it in an Authorization header to the private Cloud Function.

<!DOCTYPE html>
<html>
<head>
    <title>Google Cloud Function CORS Demo</title>
    <meta charset="UTF-8">
    <meta name="google-signin-scope" content="profile">
    <meta name="google-signin-client_id" content="54361920328-624lh96v98erlacmp5u92ds5nhjg1kqq.apps.googleusercontent.com">
    <script src="https://apis.google.com/js/platform.js" async defer></script>
</head>
<body>
    <h1>Private Google Cloud Function with CORS Support</h1>
    <div class="g-signin2" data-onsuccess="signIn" data-theme="dark"></div>
    <input type="button" id="gcf" onclick="gcf()" value="Call GCF" style="display: none;">
    <script>
        let id_token;

        function signIn(googleUser) {
            const profile = googleUser.getBasicProfile();
            const name = profile.getName();
            id_token = googleUser.getAuthResponse().id_token;
            console.log("User: " + name);
            console.log("ID Token: " + id_token);
            document.getElementById("gcf").style.display = "block";
        }

        async function gcf() {
            const url = 'https://us-west3-corstest-294418.cloudfunctions.net/privGcfCors';
            const response = await fetch(url, {
                method: 'GET',
                headers: {
                    'Authorization': 'Bearer ' + id_token
                }
            });
            console.log('response status: ' + response.status);
            if (response.ok) {
                let json = await response.json();
                console.log('response: ' + JSON.stringify(json));
            }
        }
    </script>
</body>
</html>
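The ID token the page sends is a JWT: three base64url segments (header, payload, signature) joined by dots. When a call is rejected, it can help to inspect the payload's claims, such as aud (audience) and exp (expiry). A minimal debugging sketch for a recent Node.js version (it relies on Buffer's 'base64url' encoding); decodeJwtPayload is a hypothetical helper, and because it does NOT verify the signature it must never be used to make authorization decisions:

```javascript
// Hypothetical debugging helper: decodes a JWT's payload WITHOUT verifying its signature.
function decodeJwtPayload(token) {
    const parts = token.split('.');
    if (parts.length !== 3) {
        throw new Error('not a JWT: expected three dot-separated segments');
    }
    // The middle segment is base64url-encoded JSON.
    const json = Buffer.from(parts[1], 'base64url').toString('utf8');
    return JSON.parse(json);
}
```

For example, `decodeJwtPayload(id_token).aud` shows which audience the token was issued for.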

Browser Output

Fail. This is where things get interesting. Even though CORS and authentication are handled in both the cloud function and the web page, the call still fails. The browser's network view makes the reason apparent: the CORS preflight (OPTIONS) request fails.

Per the CORS specification, browsers send the preflight without credentials, so it carries no Authorization header. A private Cloud Function rejects unauthenticated requests before they reach the function code, so the OPTIONS handler in privGcfCors never runs. I consider this a bug in Cloud Functions, and in the integration of Cloud Functions with Cloud Endpoints via Cloud Run. Google is calling it a feature request rather than a bug. In any case, neither platform handles CORS preflighting properly in an authenticated environment: both expect an Authorization header on that OPTIONS call, which no browser sends.
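To see exactly what the private function receives, the sketch below builds the preflight headers a browser derives from an intended request, following the Fetch standard's shape: the names of non-safelisted headers go into Access-Control-Request-Headers (lowercased, sorted, comma-separated), but their values, including the bearer token, are not sent. buildPreflightHeaders is a hypothetical helper, and it treats Content-Type as safelisted regardless of its value (a simplification; the real rule also checks the value):

```javascript
// Simplified: header names a page can send without listing them in the preflight.
const SAFELISTED = new Set(['accept', 'accept-language', 'content-language', 'content-type']);

// Hypothetical helper: headers of the OPTIONS preflight a browser would send
// from `origin` before issuing `method` with `headers`.
function buildPreflightHeaders(origin, method, headers = {}) {
    const unsafe = Object.keys(headers)
        .map((name) => name.toLowerCase())
        .filter((name) => !SAFELISTED.has(name))
        .sort();
    const preflight = {
        'Origin': origin,
        'Access-Control-Request-Method': method,
    };
    if (unsafe.length > 0) {
        preflight['Access-Control-Request-Headers'] = unsafe.join(',');
    }
    // Note what is missing: no Authorization header, so IAM sees an anonymous request.
    return preflight;
}
```

For the Scenario 3 page, `buildPreflightHeaders('http://corstest.sysint.club', 'GET', { Authorization: 'Bearer <token>' })` yields Origin, Access-Control-Request-Method: GET, and Access-Control-Request-Headers: authorization, with no token anywhere. The same headers can be sent with curl's -X OPTIONS and -H flags to reproduce the rejected preflight outside a browser.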

Scenario 4: Proxied calls to the Private Cloud Function

One workaround is to serve everything from a single origin: same-origin requests aren't subject to CORS, so no preflight is triggered. Another option is a custom proxy in front of the private Cloud Function. Remember, the productized solution (Cloud Endpoints) is not a solution at the time of this writing; it suffers from the same CORS preflight problem with authentication.

Architecture

Proxy in a Cloud Function

This is a publicly accessible Cloud Function that uses the http-proxy module to relay requests and responses to a target URL passed as a query parameter. That target URL is the private Cloud Function, which no longer needs any CORS handling code: all CORS interactions happen with the proxy function, and the browser's Authorization header is forwarded with the relayed request.

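const httpProxy = require('http-proxy');

// Create the proxy once at module load so warm invocations reuse it.
const proxy = httpProxy.createProxyServer({});

exports.gcfProxy = (req, res) => {
    res.set('Access-Control-Allow-Origin', 'http://corstest.sysint.club');

    switch (req.method) {
        case 'OPTIONS':
            // Answer the CORS preflight here; the browser sends it without an Authorization header.
            res.set('Access-Control-Allow-Methods', 'POST, GET, PUT, DELETE');
            res.set('Access-Control-Allow-Headers', 'Authorization');
            res.set('Access-Control-Max-Age', '3600');
            res.status(204).send('');
            break;
        case 'POST':
        case 'GET':
        case 'PUT':
        case 'DELETE':
            if (!req.query.target) {
                res.status(400).send('Missing target query parameter');
                break;
            }
            // Relay the request (including its Authorization header) to the private function.
            // changeOrigin sets the outgoing Host header to the target's host.
            // Note: Cloud Functions parses request bodies before this handler runs, so a
            // POST/PUT body may not be re-streamed as-is; this demo only exercises GET.
            proxy.web(req, res, { target: req.query.target, changeOrigin: true }, (err) => {
                // Without this callback, a failed upstream request could leave the client with no response.
                if (!res.headersSent) {
                    res.status(502).send(`Proxy error: ${err.message}`);
                }
            });
            break;
        default:
            res.status(405).send(`${req.method} not supported`);
    }
};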
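As written, the proxy relays to whatever URL arrives in the target query parameter, so anyone can use it to reach any host. One way to narrow that, sketched here under the assumption that only the private function in this project should be reachable, is to validate target before calling proxy.web(). ALLOWED_TARGETS and isAllowedTarget are hypothetical names:

```javascript
// Hypothetical allowlist of target URLs (origin + path) the proxy may relay to.
const ALLOWED_TARGETS = new Set([
    'https://us-west3-corstest-294418.cloudfunctions.net/privGcfNoCors',
]);

// req.query.target may be undefined, a string, or an array (repeated parameter).
function isAllowedTarget(target) {
    if (typeof target !== 'string') return false;
    let url;
    try {
        url = new URL(target);
    } catch {
        return false; // not a parseable absolute URL
    }
    if (url.protocol !== 'https:') return false;
    // Match on origin + normalized path, ignoring any query string or fragment.
    return ALLOWED_TARGETS.has(url.origin + url.pathname);
}
```

Inside the relay case, `if (!isAllowedTarget(req.query.target)) { res.status(400).send('Target not allowed'); break; }` would reject anything else before it reaches http-proxy.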

CURL Output

Success.

curl -v https://us-west3-corstest-294418.cloudfunctions.net/gcfProxy?target=\
https://us-west3-corstest-294418.cloudfunctions.net/privGcfNoCors \
    -H "Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6ImYwOTJiNjEyZTliNjQ0N2RlYjEwNjg1YmI4ZmZhOGFlNjJmNmFhOTEi"

< HTTP/2 200
< access-control-allow-origin: http://corstest.sysint.club
< alt-svc: h3-Q050=":443"; ma=2592000,h3-29=":443"; ma=2592000,h3-T051=":443";
< alt-svc: h3-Q050=":443"; ma=2592000,h3-29=":443"; ma=2592000,h3-T051=":443";
< function-execution-id: x4lxp9wh8gm5
< function-execution-id: x4lxp9wh8gm5
< x-cloud-trace-context: 3cf2d592ced9b73ba25e6b361d6197c4;o=1
< x-cloud-trace-context: 3cf2d592ced9b73ba25e6b361d6197c4;o=1
< x-powered-by: Express
< date: Fri, 06 Nov 2020 21:05:07 GMT
< server: Google Frontend
< content-length: 26
<
* Connection #0 to host us-west3-corstest-294418.cloudfunctions.net left intact
{"result":"GET processed"}

Browser Output

Success.
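The page code for this scenario isn't reproduced here. As a sketch, assuming the page calls the proxy the same way the curl command does, the target URL should be percent-encoded into the query string, which URLSearchParams handles. proxyUrl and callViaProxy are hypothetical helpers:

```javascript
const PROXY = 'https://us-west3-corstest-294418.cloudfunctions.net/gcfProxy';
const TARGET = 'https://us-west3-corstest-294418.cloudfunctions.net/privGcfNoCors';

// Builds gcfProxy?target=<percent-encoded target URL>.
function proxyUrl(proxy, target) {
    const url = new URL(proxy);
    url.searchParams.set('target', target);
    return url.toString();
}

// Page-side call; idToken is the Google ID token obtained at sign-in.
async function callViaProxy(idToken) {
    const response = await fetch(proxyUrl(PROXY, TARGET), {
        method: 'GET',
        headers: { 'Authorization': 'Bearer ' + idToken },
    });
    return response.json();
}
```

The browser still preflights this request because of the Authorization header, but now the public proxy answers the OPTIONS call itself, so the private function only ever sees the authenticated GET.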

Copyright ©1993-2024 Joey E Whelan, All rights reserved.