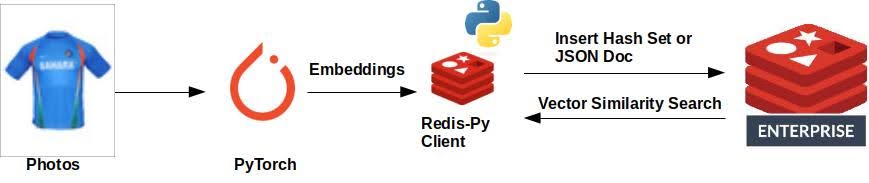

Summary

I'll show some examples of how to use vector similarity search (VSS) in Redis. I'll generate embeddings from pictures, store the resulting vectors in Redis, and then run searches against those stored vectors.

Architecture

Data Set

I used the Fashion Dataset on Kaggle as the source of photos to vectorize and store in Redis.

Application

The sample app is written in Python and organized as a single class. That class does a one-time conversion of the dataset photos to vectors and writes the vectors to a file. The file is then used to load the vectors and their associated photo IDs into Redis. Vector searches for other photos can then be performed against Redis.

Embedding

The code below reads a directory containing the dataset photos, vectorizes each photo, and then writes those vectors to a file, one JSON object per line. As mentioned, this is a one-time operation.

    # One-time step: only runs when the vector file is missing and images are present.
    if (not os.path.exists(VECTOR_FILE) and len(os.listdir(IMAGE_DIR)) > 0):
        img2vec = Img2Vec(cuda=False)
        images: list = os.listdir(IMAGE_DIR)
        images = images[0:NUM_IMAGES]
        with open(VECTOR_FILE, 'w') as outfile:
            for image in images:
                img: Image = Image.open(f'{IMAGE_DIR}/{image}').convert('RGB').resize((224, 224))
                vector: list = img2vec.get_vec(img)
                # The file name without its extension serves as the image ID.
                id: str = os.path.splitext(image)[0]
                json.dump({'image_id': id, 'image_vector': vector.tolist()}, outfile)
                outfile.write('\n')
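The file format above is one JSON object per line, which lets the loader read it back a record at a time. Here is a minimal, standard-library-only sketch of that round trip. The helper names `write_vectors` and `read_vectors`, the temporary path, and the tiny three-element vectors are all made up for illustration; the real file holds the full embeddings.

```python
import json
import os
import tempfile

def write_vectors(path, records):
    """Write one JSON object per line, mirroring the layout of the vector file."""
    with open(path, 'w') as outfile:
        for image_id, vector in records:
            json.dump({'image_id': image_id, 'image_vector': vector}, outfile)
            outfile.write('\n')

def read_vectors(path):
    """Yield (image_id, vector) pairs back from the file, one line at a time."""
    with open(path, 'r') as infile:
        for line in infile:
            obj = json.loads(line)
            yield str(obj['image_id']), obj['image_vector']

# Hypothetical sample data standing in for real image embeddings.
sample = [('1001', [0.25, 0.5, 0.75]), ('1002', [1.0, 0.0, -1.0])]
path = os.path.join(tempfile.mkdtemp(), 'vectors.jsonl')
write_vectors(path, sample)
loaded = list(read_vectors(path))
```

Because each line is an independent JSON document, the reader never has to hold the whole file in memory as a single parsed structure.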

Redis Data Loading

Redis supports VSS for both the JSON and Hash data types. I parameterized a function to allow the creation of Redis VSS indices for either data type. One important difference between the two: in JSON documents, vectors can be stored as is (an array of floats), but in Hashes they must be serialized to a BLOB (the raw bytes of the float array).

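    def _get_images(self) -> None:
        # Fill self.image_dict with image_id -> vector, in the form the target data type needs.
        with open(VECTOR_FILE, 'r') as infile:
            for line in infile:
                obj: dict = json.loads(line)
                id: str = str(obj['image_id'])
                match self.object_type:
                    case OBJECT_TYPE.HASH:
                        # Hashes need the vector as raw FLOAT32 bytes.
                        self.image_dict[id] = np.array(obj['image_vector'], dtype=np.float32).tobytes()
                    case OBJECT_TYPE.JSON:
                        # JSON documents keep the vector as a plain array of floats.
                        self.image_dict[id] = obj['image_vector']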
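To make the BLOB form used in the Hash branch concrete, here is a small sketch that packs a list of floats into consecutive 32-bit values with only the standard library. It assumes little-endian byte order, which is what `np.float32(...).tobytes()` yields on common x86 and ARM machines. The helper names are hypothetical and are not part of the sample app.

```python
import struct

def to_float32_blob(vector):
    """Pack floats as consecutive little-endian 32-bit values."""
    return struct.pack(f'<{len(vector)}f', *vector)

def from_float32_blob(blob):
    """Unpack a blob produced by to_float32_blob back into a list of floats."""
    count = len(blob) // 4  # 4 bytes per FLOAT32 component
    return list(struct.unpack(f'<{count}f', blob))

vector = [0.5, -1.25, 2.0]            # values chosen to be exact in float32
blob = to_float32_blob(vector)
restored = from_float32_blob(blob)

# A 512-dimension FLOAT32 vector occupies 512 * 4 bytes.
blob_512 = to_float32_blob([0.0] * 512)
```

The fixed size per vector (4 bytes times the dimension) is why the index schema has to declare both `TYPE` and `DIM`. The index definition and load that consume these values follow in `_load_db`.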

    def _load_db(self) -> None:
        # Start from an empty database, then read the vectors from the file.
        self.connection.flushdb()
        self._get_images()

        match self.object_type:
            case OBJECT_TYPE.HASH:
                # Hash fields are indexed by their field names.
                schema = [
                    VectorField('image_vector',
                        self.index_type.value,
                        {   "TYPE": 'FLOAT32',
                            "DIM": 512,
                            "DISTANCE_METRIC": self.metric_type.value
                        }
                    ),
                    TagField('image_id')
                ]
                idx_def = IndexDefinition(index_type=IndexType.HASH, prefix=['key:'])
                self.connection.ft('idx').create_index(schema, definition=idx_def)

                # Batch all writes in a single pipeline.
                pipe: Connection = self.connection.pipeline()
                for id, vec in self.image_dict.items():
                    pipe.hset(f'key:{id}', mapping={'image_id': id, 'image_vector': vec})
                pipe.execute()
            case OBJECT_TYPE.JSON:
                # JSON fields are indexed by JSONPath and aliased to the same names as the Hash fields.
                schema = [
                    VectorField('$.image_vector',
                        self.index_type.value,
                        {   "TYPE": 'FLOAT32',
                            "DIM": 512,
                            "DISTANCE_METRIC": self.metric_type.value
                        }, as_name='image_vector'
                    ),
                    TagField('$.image_id', as_name='image_id')
                ]
                idx_def: IndexDefinition = IndexDefinition(index_type=IndexType.JSON, prefix=['key:'])
                self.connection.ft('idx').create_index(schema, definition=idx_def)

                pipe: Connection = self.connection.pipeline()
                for id, vec in self.image_dict.items():
                    pipe.json().set(f'key:{id}', '$', {'image_id': id, 'image_vector': vec})
                pipe.execute()
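For readers more familiar with the Redis CLI, the two schemas above should correspond roughly to the `FT.CREATE` commands built by the sketch below. This is an illustration, not output captured from the client: the function name is hypothetical, and `FLAT` and `COSINE` are assumed values for `index_type` and `metric_type`, which the app leaves configurable. The client may also add default options that are not shown here.

```python
def ft_create_args(object_type, index_type='FLAT', metric='COSINE', dim=512):
    """Build an illustrative FT.CREATE argument list for a HASH or JSON index."""
    if object_type == 'HASH':
        vector_field = ['image_vector']
        tag_field = ['image_id']
    elif object_type == 'JSON':
        # JSON fields are addressed by JSONPath and given an alias with AS.
        vector_field = ['$.image_vector', 'AS', 'image_vector']
        tag_field = ['$.image_id', 'AS', 'image_id']
    else:
        raise ValueError(f'unsupported object type: {object_type}')

    vector_attrs = ['TYPE', 'FLOAT32', 'DIM', str(dim), 'DISTANCE_METRIC', metric]
    return (
        ['FT.CREATE', 'idx', 'ON', object_type, 'PREFIX', '1', 'key:', 'SCHEMA']
        # The number after the algorithm is the count of attribute tokens that follow.
        + vector_field + ['VECTOR', index_type, str(len(vector_attrs))] + vector_attrs
        + tag_field + ['TAG']
    )

hash_cmd = ' '.join(ft_create_args('HASH'))
json_cmd = ' '.join(ft_create_args('JSON'))
print(hash_cmd)
print(json_cmd)
```

Aliasing the JSON paths to `image_vector` and `image_id` is what allows one query string to work against either index.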

Search

With vectors loaded and indices created in Redis, a vector search looks the same for either JSON or Hashes. In both cases the search vector must be passed as a BLOB (the raw bytes of a FLOAT32 array). Searches can be strictly for vector similarity (KNN search) or a combination of VSS and a traditional Redis Search query (hybrid search). The function below is parameterized to support both.

    def search(self, query_vector: list, search_type: SEARCH_TYPE, hyb_str=None) -> list:
        match search_type:
            case SEARCH_TYPE.VECTOR:
                # Pure KNN: consider every document in the index.
                q_str = f'*=>[KNN {TOPK} @image_vector $vec_param AS vector_score]'
            case SEARCH_TYPE.HYBRID:
                # Hybrid: filter on the image_id tag first, then run KNN on the matches.
                q_str = f'(@image_id:{{{hyb_str}}})=>[KNN {TOPK} @image_vector $vec_param AS vector_score]'

        q = Query(q_str)\
            .sort_by('vector_score')\
            .paging(0,TOPK)\
            .return_fields('vector_score','image_id')\
            .dialect(2)
        # query_vector must already be in BLOB form (FLOAT32 bytes) at this point.
        params_dict = {"vec_param": query_vector}
        results = self.connection.ft('idx').search(q, query_params=params_dict)
        return results
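The `vector_score` returned by a KNN query is a distance, so smaller values mean closer matches, which is why the query sorts on it in ascending order. The brute-force sketch below shows the same idea in plain Python. It assumes the COSINE metric (distance = 1 minus cosine similarity); the app's actual metric is configurable. The function names and the two-dimensional vectors are invented for illustration.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def knn(query, stored, k):
    """Score every stored vector against the query and keep the k closest."""
    scored = [(image_id, cosine_distance(query, vec)) for image_id, vec in stored.items()]
    scored.sort(key=lambda pair: pair[1])  # ascending: smallest distance first
    return scored[:k]

# Hypothetical stored vectors keyed by image ID.
stored = {
    'a': [1.0, 0.0],
    'b': [0.0, 1.0],
    'c': [1.0, 1.0],
}
top2 = knn([1.0, 0.0], stored, k=2)
```

Here `'a'` points in the same direction as the query and comes first, followed by `'c'`, which is 45 degrees away. A FLAT index does this kind of exhaustive comparison, while an HNSW index trades some accuracy for speed.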

Source

https://github.com/Redislabs-Solution-Architects/vss-ops

Copyright ©1993-2024 Joey E Whelan, All rights reserved.