Summary

This is Part 2 of a two-part series on implementing a contact center ACD (automatic call distributor) using Redis data structures. This part focuses on the network configuration. In particular, I explain how to configure HAProxy load balancing with VRRP redundancy in a Redis Enterprise environment. I also cover some of the complexities of doing this inside a Docker container environment.

Network Architecture

Load Balancing Configuration

HAProxy w/Keepalived

Docker Container

Below are the Dockerfile and associated Docker Compose script for two instances of HAProxy with keepalived. Note that the default start-up command of the HAProxy container is overridden with a CMD that starts both keepalived and haproxy.

Keepalived Config

VRRP redundancy of the two HAProxy instances is implemented with keepalived. Below is the config for the Master instance. The Backup instance is identical except for the priority.

Web Servers

I'll start with the simplest load-balancing scenario: a web farm.

Docker Container

Below are the Dockerfile and associated Docker Compose script for a two-server deployment of Python FastAPI. Note that no IP addresses are assigned; multiple instances are deployed via the Docker Compose 'replicas' setting.

HAProxy Config

Below are the front and backend configurations. Note the use of Docker's DNS server to enable dynamic mapping of the web servers via a HAProxy server template.
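A minimal sketch of this kind of configuration, for illustration only. The service name `webserver`, port 8000, and the section names are assumptions, not values taken from the repository, and a `defaults` section with the usual timeouts is assumed to exist elsewhere in the file.

```haproxy
resolvers docker
    # Docker's embedded DNS server is reachable at 127.0.0.11 on user-defined networks
    nameserver dns1 127.0.0.11:53
    resolve_retries 3
    timeout resolve 1s
    timeout retry   1s
    hold valid      10s

frontend web_front
    mode http
    bind *:8000
    default_backend web_back

backend web_back
    mode http
    balance roundrobin
    # Creates two server slots (web1, web2) that are filled from the DNS
    # answers for the assumed Compose service name "webserver".
    # "init-addr none" lets HAProxy start even if the name does not resolve yet.
    server-template web 2 webserver:8000 check resolvers docker init-addr none
```

Because the slots are populated from DNS at runtime, the web containers need no fixed IP addresses, which is what makes the 'replicas' approach workable.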

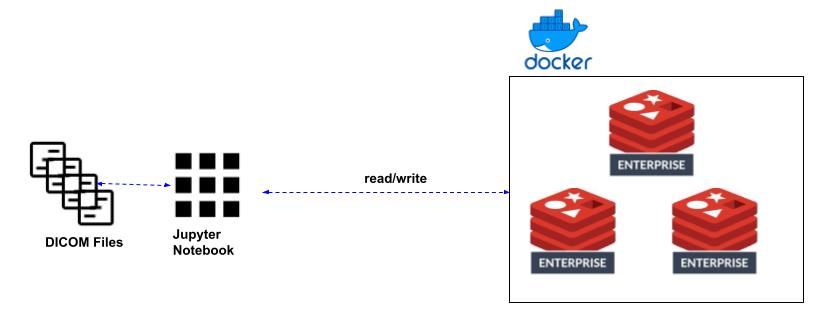

Redis Enterprise Components

Redis Enterprise can provide its own load balancing via internal DNS servers. For those who do not want to use DNS, external load balancing is also supported. The official Redis documentation covers the general configuration of external load balancing. I'm going to go into detail on the specifics of setting this up with the HAProxy load balancer in a Docker environment.

Docker Containers

A three-node cluster is provisioned below. Note the ports that are opened:

- 8443 - Redis Enterprise Admin Console

- 9443 - Redis Enterprise REST API

- 12000 - The client port configured for the database.

RE Database Configuration

Below is a JSON config that can be used via the RE REST API to create a Redis database. Note the proxy policy. "all-nodes" enables a database client connection point on all the Redis nodes.

RE Cluster Configuration

In the start.sh script, the command below is added to configure redirects in the cluster (per the Redis documentation).

HAProxy Config - RE Admin Console

Redis Enterprise has a web interface for configuration and monitoring (TLS, port 8443). I configure back-to-back TLS sessions below with a local SSL cert for the front end. Additionally, I configure 'sticky' sessions via cookies.
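A minimal sketch of what back-to-back TLS with cookie-based stickiness can look like in HAProxy. The node host names (`re1`, `re2`, `re3`), the certificate path, and the cookie name are assumptions made for illustration, and timeouts are assumed to come from a `defaults` section.

```haproxy
frontend re_admin_front
    mode http
    # Terminate the client's TLS session with a local certificate (assumed path)
    bind *:8443 ssl crt /usr/local/etc/haproxy/haproxy.pem
    default_backend re_admin_back

backend re_admin_back
    mode http
    balance roundrobin
    # Insert a cookie naming the chosen node so later requests stick to it
    cookie SERVERID insert indirect nocache
    # Open a second TLS session to each node; "verify none" skips validation
    # of the nodes' certificates, which is only reasonable in a lab setup
    server re1 re1:8443 ssl verify none cookie re1 check
    server re2 re2:8443 ssl verify none cookie re2 check
    server re3 re3:8443 ssl verify none cookie re3 check
```

Stickiness matters here because the admin console keeps a login session on the node that served it; without the cookie, round-robin could send follow-up requests to a different node.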

HAProxy Config - RE REST API

Redis Enterprise provides a REST API for programmatic configuration and provisioning (TLS, port 9443). For this scenario, I simply pass the TLS sessions through HAProxy via TCP.
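A minimal sketch of TLS pass-through in TCP mode. The node host names (`re1`, `re2`, `re3`) and section names are assumptions for illustration, and timeouts are assumed to come from a `defaults` section.

```haproxy
frontend re_api_front
    # TCP mode: HAProxy forwards the encrypted bytes without decrypting them
    mode tcp
    bind *:9443
    default_backend re_api_back

backend re_api_back
    mode tcp
    balance roundrobin
    # Plain "check" performs a TCP connect test against each node
    server re1 re1:9443 check
    server re2 re2:9443 check
    server re3 re3:9443 check
```

With pass-through, the client negotiates TLS directly with the Redis Enterprise node, so HAProxy needs no certificate for this port.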

HAProxy Config - RE Database

A Redis Enterprise database can have a configurable client connection port. In this case, I've configured it to 12000 (TCP). Note in the backend configuration I've set up a Layer 7 health check that will attempt to create an authenticated Redis client connection, send a Redis PING, and then close that connection.
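A minimal sketch of such a health check using HAProxy's `tcp-check` directives. The node host names (`re1`, `re2`, `re3`), the password `redis`, and the check interval are assumptions for illustration, and timeouts are assumed to come from a `defaults` section.

```haproxy
frontend redis_front
    mode tcp
    bind *:12000
    default_backend redis_back

backend redis_back
    mode tcp
    balance roundrobin
    option tcp-check
    # Authenticate with the database password (assumed to be "redis")
    tcp-check send AUTH\ redis\r\n
    tcp-check expect string +OK
    # A healthy endpoint answers PING with +PONG
    tcp-check send PING\r\n
    tcp-check expect string +PONG
    # Close the connection cleanly
    tcp-check send QUIT\r\n
    tcp-check expect string +OK
    server re1 re1:12000 check inter 1s
    server re2 re2:12000 check inter 1s
    server re3 re3:12000 check inter 1s
```

A node is marked up only if every step of the sequence succeeds, so the check exercises the database endpoint itself instead of just confirming that the port is open.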

Source

https://github.com/redis-developer/basic-acd

Copyright ©1993-2024 Joey E Whelan, All rights reserved.
