Summary

This post demonstrates using Redis to cache DICOM imagery. I use a Jupyter Notebook to step through loading and searching DICOM images in a Redis Enterprise environment.

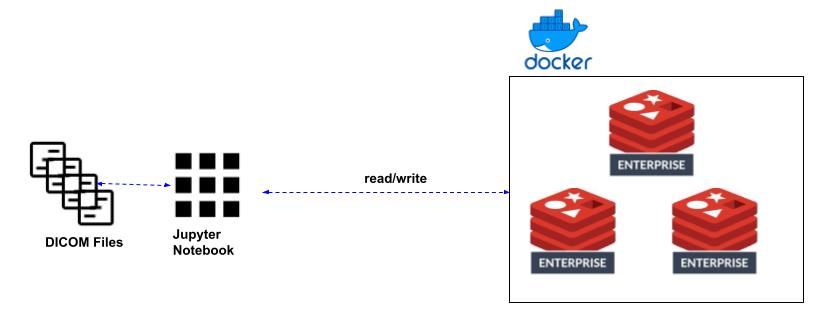

Architecture

Redis Enterprise Environment

The screenshot below shows the resulting environment in Docker.

Sample DICOM Image

I use a subset of the sample images included with the Pydicom library. Below is an example:

Code Snippets

Data Load

The code below loops through the DICOM files included with Pydicom. Files that contain the meta-data used in the search scenarios later in this post are split into 5 KB chunks, and each chunk is stored as a Redis String. The meta-data is then saved to a Redis JSON object, along with an array holding the chunks' Redis key names.
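As a rough illustration of the chunk-and-store step, here is a minimal sketch rather than the notebook's actual code. It assumes `client` is a redis-py `redis.Redis` instance connected to a server with JSON support. The key names (`chunk:<id>:<n>`, `image:<id>`), the `chunks` field, and the helper names are all hypothetical.

```python
from typing import Any, Dict, Iterator, List

CHUNK_SIZE = 5 * 1024  # 5 KB chunks, as described above


def chunk_bytes(data: bytes, size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield successive slices of `data`, each at most `size` bytes long."""
    for offset in range(0, len(data), size):
        yield data[offset:offset + size]


def store_image(client: Any, image_id: str, raw: bytes,
                meta: Dict[str, Any]) -> List[str]:
    """Store `raw` as chunked Redis Strings plus one JSON document.

    Assumption: `client` behaves like redis-py's `redis.Redis`.
    The key layout and the `chunks` field name are hypothetical.
    """
    chunk_keys: List[str] = []
    for i, chunk in enumerate(chunk_bytes(raw)):
        key = f"chunk:{image_id}:{i}"
        client.set(key, chunk)          # each chunk is a plain Redis String
        chunk_keys.append(key)

    # The JSON document carries the meta-data and the ordered chunk key names.
    doc = dict(meta, chunks=chunk_keys)
    client.json().set(f"image:{image_id}", "$", doc)
    return chunk_keys
```

With a live server, `client` could be created with something like `redis.Redis(host="localhost", port=6379)`, and `raw` read from one of the Pydicom sample files with `open(path, "rb").read()`. The host and port here are placeholders.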

Search Scenario 1

This code retrieves all the byte chunks for a DICOM image whose Redis key is known. Strictly speaking, this isn't a 'search'; I'm simply performing a JSON GET on a key name.
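A minimal sketch of that retrieval, under a few assumptions: `client` is a redis-py `redis.Redis` instance created without `decode_responses=True` (so chunk values come back as `bytes`), and the JSON document keeps its ordered chunk key names in a field I'm calling `chunks`. Both the field name and the `fetch_image` helper are hypothetical.

```python
from typing import Any


def fetch_image(client: Any, doc_key: str) -> bytes:
    """Rebuild a DICOM byte stream from its JSON document and chunk Strings.

    Assumption: the JSON document at `doc_key` has a `chunks` array
    (hypothetical name) listing the chunk keys in order.
    """
    doc = client.json().get(doc_key)    # JSON GET on the known key
    if doc is None:
        raise KeyError(f"no JSON document at {doc_key!r}")

    chunk_keys = doc["chunks"]
    if not chunk_keys:
        return b""

    chunks = client.mget(chunk_keys)    # one round trip for all chunks
    if any(chunk is None for chunk in chunks):
        raise LookupError(f"missing chunk(s) for {doc_key!r}")
    return b"".join(chunks)
```

The returned bytes could then be wrapped in `io.BytesIO` and handed to `pydicom.dcmread` to get a dataset back.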

Search Scenario 2

The code below demonstrates how to build a Redis Search query against the image meta-data. In this case, we're looking for a DICOM image with a protocolName of 194 and a studyDate in 2019.
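One way such a query could look is sketched here, with several assumptions that may not match the notebook: a search index (hypothetically named `dicom_idx`) already exists over the JSON documents, `protocolName` is indexed as a TAG field, and `studyDate` is indexed as a NUMERIC field holding the DICOM `YYYYMMDD` date as an integer. `client` is assumed to be a redis-py `redis.Redis` instance.

```python
from typing import Any, List


def build_query(protocol_name: str, year: int) -> str:
    """Build a query string: exact TAG match plus a NUMERIC date range.

    Assumption: studyDate is stored as an integer in YYYYMMDD form, so a
    whole year is the inclusive range [YYYY0101, YYYY1231].
    """
    return (
        f"@protocolName:{{{protocol_name}}} "
        f"@studyDate:[{year}0101 {year}1231]"
    )


def find_images(client: Any, protocol_name: str, year: int,
                index_name: str = "dicom_idx") -> List[str]:
    """Return the Redis keys of matching JSON documents.

    `index_name` is a hypothetical index name.
    """
    result = client.ft(index_name).search(build_query(protocol_name, year))
    return [doc.id for doc in result.docs]
```

For example, `build_query("194", 2019)` yields `@protocolName:{194} @studyDate:[20190101 20191231]`. Tag values containing spaces or punctuation would need escaping, which this sketch does not handle.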

Source

Copyright ©1993-2024 Joey E Whelan, All rights reserved.