Summary

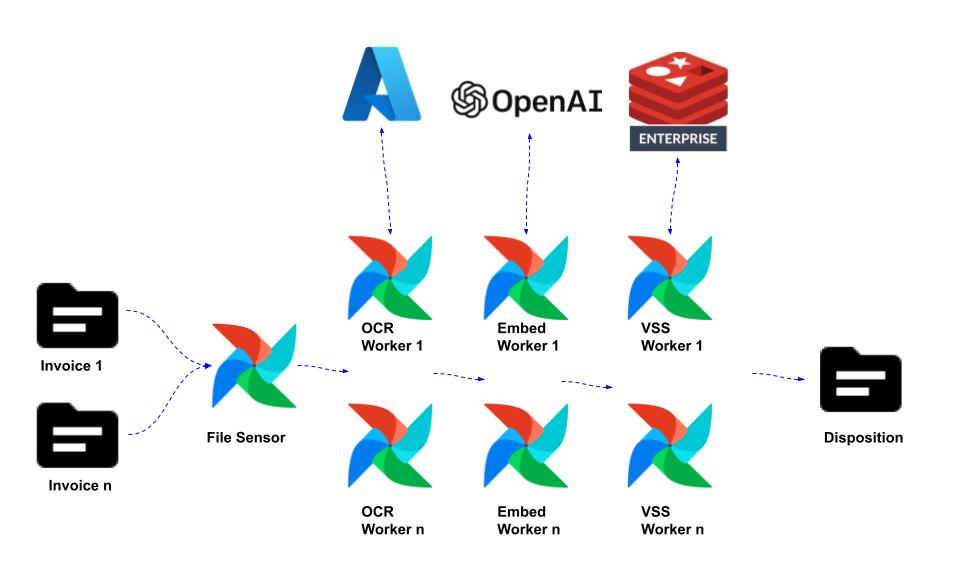

In this post, I cover an approach to a document AI problem using a task flow implemented in Apache Airflow. The particular problem is the de-duplication of invoices, which comes up in the payment provider space. I use Azure AI Document Intelligence for OCR, Azure OpenAI for vector embeddings, and Redis Enterprise for vector search.
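To make the idea concrete, here is a minimal sketch of the de-duplication logic in plain Python. It is not the code from this post: `embed` is a hypothetical hashed bag-of-words stand-in for the Azure OpenAI embedding call, `InvoiceIndex` is a hypothetical in-memory stand-in for the Redis Enterprise vector index, the input strings stand in for OCR output, and the 0.9 similarity threshold is an assumed value chosen only for illustration.

```python
import hashlib
import math
from typing import List, Optional, Tuple


def embed(text: str, dims: int = 256) -> List[float]:
    """Hypothetical stand-in for an embedding model.

    Hashes each whitespace-separated token into one of `dims` buckets and
    L2-normalizes the resulting count vector.
    """
    vec = [0.0] * dims
    for token in text.lower().split():
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InvoiceIndex:
    """Hypothetical in-memory stand-in for a vector index."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold  # assumed cut-off for "same invoice"
        self.entries: List[Tuple[str, List[float]]] = []

    def nearest(self, vector: List[float]) -> Tuple[Optional[str], float]:
        """Brute-force nearest neighbor: return (invoice_id, similarity)."""
        best_id: Optional[str] = None
        best_score = 0.0
        for invoice_id, stored in self.entries:
            score = cosine_similarity(vector, stored)
            if best_id is None or score > best_score:
                best_id, best_score = invoice_id, score
        return best_id, best_score

    def add_if_new(self, invoice_id: str, ocr_text: str) -> Optional[str]:
        """Return the id of a matching stored invoice, or store this one.

        Returns None when the invoice was not a duplicate and has been added.
        """
        vector = embed(ocr_text)
        match_id, score = self.nearest(vector)
        if match_id is not None and score >= self.threshold:
            return match_id
        self.entries.append((invoice_id, vector))
        return None
```

Calling `add_if_new` twice with the same OCR text returns `None` the first time and the first invoice's id the second time. In the pipeline described here, the brute-force loop in `nearest` is the part a vector database takes over, and the threshold would need tuning against real invoices.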

Architecture

Code Snippets

File Sensor DAG

OCR DAG

OCR Client (Azure AI Doc Intelligence)

Embedding DAG

Embedding Client (Azure OpenAI)

Vector Search DAG

Vector Search Client (Redis Enterprise)

Source

Copyright ©1993-2024 Joey E Whelan, All rights reserved.